Why Query Fan-Out Matters More Than Schema Markup (ChatGPT, Gemini & Perplexity SEO Guide)

AI search engines like ChatGPT, Google Gemini, and Perplexity have changed how millions discover information online. ChatGPT Search now handles over 37.5 million daily searches, Gemini serves 650 million monthly users, and AI Overviews appear in up to 47% of informational queries. But if you're banking on tactics like schema markup or llms.txt files to get cited on these platforms, you're optimizing for the wrong thing.

The reality? LLMs don't work like search engines, and most "AI SEO" advice misses the fundamental mechanism behind how these systems actually retrieve and cite content.

What Is Query Fan-Out and Why Does It Matter?

Query fan-out is the process by which AI search platforms split a single user query into multiple sub-queries to gather comprehensive information. When someone asks ChatGPT, "What's the best CRM for small businesses?", the system doesn't search for that exact phrase. Instead, it fans out the query into related searches like "small business CRM features," "affordable CRM pricing," "CRM integration options," and "CRM user reviews."

Here's the critical insight: LLMs retrieve information from existing search results, not by crawling the web themselves. They execute these fan-out queries against traditional search indexes (Google, Bing, etc.), fetch the top-ranking pages, and synthesize answers from that content. If your page doesn't rank for the queries that LLMs fan out to, you won't get cited, period.
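To make the mechanism concrete, here is a minimal sketch of the fan-out-then-retrieve loop described above. Everything in it is an assumption for illustration: the `FAN_OUT` table, the `TOY_INDEX` rankings, the example URLs, and the `candidate_sources` helper are hypothetical stand-ins, not how any specific platform is implemented.

```python
from collections import Counter

# Hypothetical fan-out table. A real platform would generate sub-queries
# with a model rather than look them up.
FAN_OUT = {
    "best CRM for small businesses": [
        "small business CRM features",
        "affordable CRM pricing",
        "CRM integration options",
        "CRM user reviews",
    ],
}

# Hypothetical search index: sub-query -> top-ranking URLs, best first.
TOY_INDEX = {
    "small business CRM features": ["a.example/features", "b.example/guide"],
    "affordable CRM pricing": ["b.example/guide", "c.example/pricing"],
    "CRM integration options": ["b.example/guide", "a.example/features"],
    "CRM user reviews": ["d.example/reviews"],
}


def candidate_sources(query, top_k=2):
    """Count how many fan-out sub-queries each URL ranks for."""
    counts = Counter()
    for sub_query in FAN_OUT.get(query, []):
        # Only the top_k results per sub-query are ever seen.
        for url in TOY_INDEX.get(sub_query, [])[:top_k]:
            counts[url] += 1
    return counts


sources = candidate_sources("best CRM for small businesses")
for url, hits in sources.most_common():
    print(f"{url}: ranks for {hits} fan-out queries")
```

In this toy data, the page that ranks for three of the four sub-queries surfaces most often, even though no page here ranks for the original phrase itself.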

Research analyzing 173,000+ URLs found that pages ranking for both main queries and fan-out queries are 161% more likely to be cited in AI Overviews compared to pages ranking only for main keywords. Even more surprising: ranking for fan-out queries alone (without the main keyword) makes you 49% more likely to earn citations than ranking exclusively for the primary term.
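"161% more likely" is easy to misread, so here is the arithmetic as a small sketch. The percentages come from the research cited above; the 10% baseline citation rate is a made-up number used only to show the conversion, and `relative_rate` is a hypothetical helper.

```python
def relative_rate(baseline_rate, percent_more_likely):
    """Convert 'N% more likely' into a rate relative to a baseline."""
    return baseline_rate * (1 + percent_more_likely / 100)


# Hypothetical citation rate for pages ranking only for the main keyword.
BASELINE = 0.10

both = relative_rate(BASELINE, 161)          # main + fan-out queries
fan_out_only = relative_rate(BASELINE, 49)   # fan-out queries only

print(f"main keyword only: {BASELINE:.1%}")
print(f"fan-out only:      {fan_out_only:.1%}")
print(f"main + fan-out:    {both:.1%}")
```

In other words, 161% more likely means 2.61 times the baseline rate, and 49% more likely means 1.49 times the baseline.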

Busting Common AI SEO Myths

Myth 1: "Just Create Quality Content"

Quality content matters, but it's not enough. If your comprehensive 3,000-word guide doesn't rank in traditional search for the specific sub-queries LLMs fan out to, AI platforms will never see it. You need content that ranks for multiple related queries, not just one primary keyword.

Myth 2: "Schema Markup Guarantees AI Citations"

Schema markup helps search engines understand content structure, but it doesn't guarantee inclusion in AI answers. While valid schema markup reduces ambiguity and can support traditional SEO performance (which indirectly affects AI citations), LLMs primarily rely on what's already ranking in SERPs. Your schema won't matter if you're not on page one for fan-out queries.

Myth 3: "Implement llms.txt and You're Set"

The llms.txt approach assumes LLMs directly crawl your website and respect robots.txt-style directives. They don't. AI search platforms execute traditional searches, retrieve top results, and extract information from those pages. Your llms.txt file has zero impact on this process.

How to Actually Optimize for AI Search

Focus on Fan-Out Query Coverage

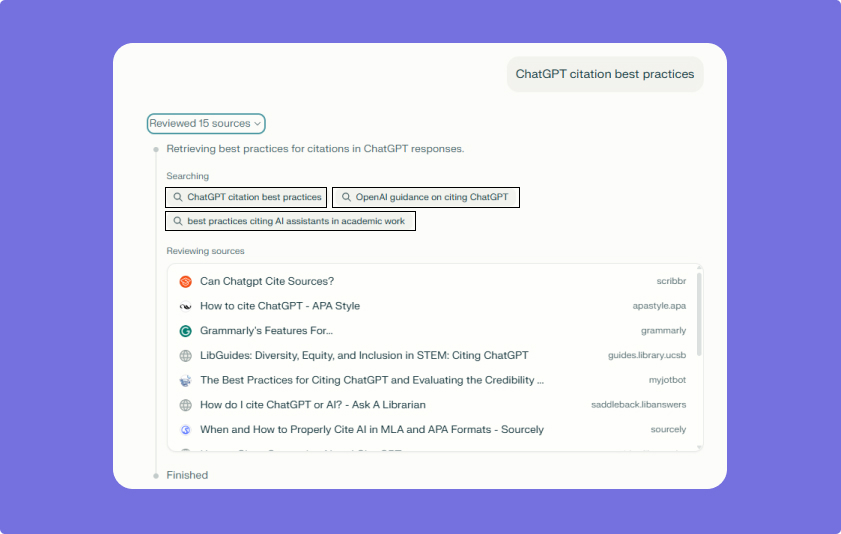

Identify the related questions and sub-topics LLMs might fan out to from your main topic. For "ChatGPT SEO services," fan-out queries might include "how ChatGPT retrieves information," "ChatGPT citation best practices," "tracking ChatGPT mentions," and "ChatGPT vs Google search differences." Create content sections targeting each sub-query with direct, quotable answers.
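A quick way to check coverage is to compare your list of fan-out queries against the headings on a page. The sketch below is a rough word-overlap check, not a ranking tool: the `tokens` and `coverage_gaps` helpers, the stop-word list, the 0.6 threshold, and the page outline are all assumptions chosen for illustration.

```python
import re

# Filler words ignored when comparing a query to a heading (assumed list).
STOP_WORDS = {"how", "vs", "the", "a", "for", "to", "and", "of"}


def tokens(text):
    """Return the set of lowercase words in text, minus filler words."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    return {w for w in words if w not in STOP_WORDS}


def coverage_gaps(fan_out_queries, headings, min_overlap=0.6):
    """Return fan-out queries that no heading covers well enough."""
    gaps = []
    for query in fan_out_queries:
        query_words = tokens(query)
        if not query_words:
            continue  # nothing meaningful to match
        best = max(
            (len(query_words & tokens(h)) / len(query_words) for h in headings),
            default=0.0,
        )
        if best < min_overlap:
            gaps.append(query)
    return gaps


queries = [
    "how ChatGPT retrieves information",
    "ChatGPT citation best practices",
    "tracking ChatGPT mentions",
    "ChatGPT vs Google search differences",
]
headings = [  # hypothetical page outline
    "How ChatGPT Retrieves Information",
    "Best Practices for ChatGPT Citation",
]

for query in coverage_gaps(queries, headings):
    print(f"No section targets: {query}")
```

With this outline, the two uncovered queries are the ones about tracking mentions and the ChatGPT vs Google comparison, which are the sections to write next.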

At Vaphers, our ChatGPT SEO services specifically optimize for query fan-out patterns to maximize citation frequency. We've seen clients achieve 876% increases in AI referral traffic by targeting the right fan-out queries rather than just primary keywords.

Rank in Traditional SERPs First

Since LLMs scrape from search results, traditional SEO fundamentals remain critical. Build topical authority, earn quality backlinks, optimize for E-E-A-T signals, and create content that ranks for both broad and specific query variations. Our Gemini SEO optimization focuses on ranking for the specific queries that Google's AI fans out to when generating AI Overviews.

Structure Content for Extraction

AI systems extract information more effectively from content with clear formatting, standalone sentences that work as quotes, and direct answers to specific questions. Use conversational Q&A formats, bullet points for key facts, and summaries that can be cited independently. This isn't about schema. It's about making your ranked content easy for LLMs to extract and cite.

Cover Topics Comprehensively

The correlation between fan-out query coverage and AI citations is 0.77, a strong positive relationship. This suggests comprehensive subtopic coverage matters more than ranking for a single head term. Build content clusters that address related questions, use cases, comparisons, and implementation details. Our Perplexity SEO strategies leverage this by creating interconnected content that ranks for multiple fan-out variations.
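If you want to run the same kind of check on your own pages, a correlation figure like this is a Pearson coefficient. Here is a minimal sketch of the calculation. The `pearson` helper is written out by hand for clarity, and the two data lists are invented toy numbers, not the data behind the 0.77 figure.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two lists of equal length, at least 2 items")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant data")
    return cov / sqrt(var_x * var_y)


# Toy data (invented): per page, how many fan-out queries it ranks for
# and how many AI citations it earned.
fan_out_coverage = [1, 2, 3, 4, 5]
ai_citations = [2, 1, 4, 3, 6]

r = pearson(fan_out_coverage, ai_citations)
print(f"r = {r:.2f}, r squared = {r * r:.2f}")
```

Squaring the coefficient gives a rough sense of scale: at 0.77, fan-out coverage would account for about 59% of the variation in citations.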

The Bottom Line

AI search optimization isn't about implementing special tags or creating "AI-friendly" content. It's about understanding how these systems actually work: they fan out queries, scrape traditional search results, and cite sources that rank for those expanded searches.

Stop chasing AI SEO myths. Start ranking for the queries that AI platforms fan out to, and you'll naturally appear in their citations. That's the real strategy behind getting cited in ChatGPT, Gemini, and Perplexity, and it requires mastering both traditional SEO and query fan-out patterns.

Want to dominate AI search results? Focus on comprehensive topical coverage, rank for fan-out queries, and structure your content for easy extraction. Everything else is noise.